日志管理

更新时间:2020-12-11

CCE 日志管理

CCE 日志管理功能帮助用户对 kubernetes 集群中的业务日志和容器日志进行管理。用户通过日志管理可以将集群中的日志输出到外部的 Elasticsearch 服务或者百度云自己的 BOS 存储中,从而对日志进行分析或者长期保存。

创建日志规则

在左侧导航栏,点击“监控日志 > 日志管理”,进入日志规则列表页。点击日志规则列表中的新建日志规则:

- 规则名称:用户自定义,用来对不同的日志规则进行标识和描述

- 日志类型:“容器标准输出“ 是指容器本身运行时输出的日志,可以通过 docker logs 命令查看,“容器内部日志”是指容器内运行的业务进程,存储在容器内部某个路径

- 集群名称和日志来源定位到需要输出日志的对象,如果选择“指定容器”,则支持在Deployment、Job、CronJob、DaemonSet、StatefulSet 五种资源维度进行选择

- Elasticsearch 的地址、端口、索引、以及加密等配置,用来帮助 CCE 日志关联服务将日志输出到对应的 Elasticsearch 服务中。请填写 Elasticsearch 服务信息,需要确保 CCE 集群可以与该 Elasticsearch 服务正确建立连接。生产环境建议直接使用 BES (百度 Elasticsearch)服务。

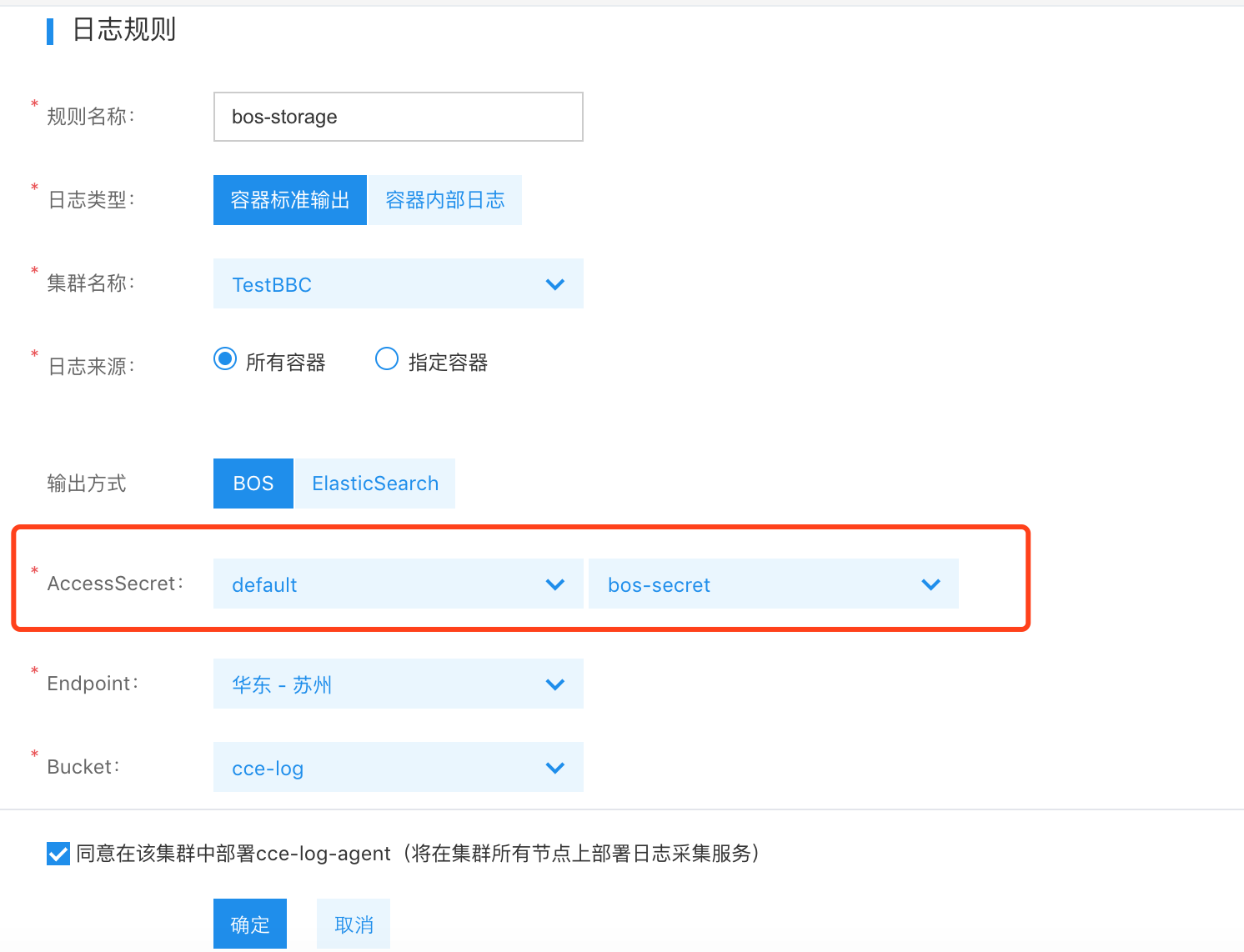

- 若要推送到 BOS 存储中需要创建一个可以连接 BOS 存储的 Secret 资源,按以下格式创建,然后在选择 BOS 存储的时候,选定该 Secret 所在的命名空间以及名字。如果机器可以连接外网,那么 BOS 的 Endpoint 可以选择任意地域,如果不能连接外网,则只能选择跟 CCE 集群一样的地域。

- 创建 BOS 的 Secret 因为权限限制的问题,目前必须使用主账户的 AK/SK,否则无法通过认证鉴权。

YAML

1apiVersion: v1

2kind: Secret

3metadata:

4 name: bos-secret

5data:

6 bosak: dXNlcm5hbWU= # echo -n "bosak...." | base64

7 bossk: cGFzc3dvcmQ= # echo -n "bossk...." | base64

配置 kubernetes 资源

在配置完日志管理规则后,需要确保kubernetes中的日志能够正确输出,因此需要在创建相关的kubernetes资源时传入指定的环境变量:

- 传入环境变量 cce_log_stdout 并指定 value 为 true 表示采集该容器的标准输出,不采集则无需传递该环境变量

- 传入环境变量 cce_log_internal 并指定 value 为容器内日志文件的绝对路径,此处需填写文件路径,不能为目录

- 采集容器内文件时需将容器内日志文件所在目录以 emptydir 形式挂载至宿主机。

请参考以下yaml示例:

YAML

1apiVersion: apps/v1

2kind: Deployment

3metadata:

4 name: tomcat

5spec:

6 selector:

7 matchLabels:

8 app: tomcat

9 replicas: 4

10 template:

11 metadata:

12 labels:

13 app: tomcat

14 spec:

15 containers:

16 - name: tomcat

17 image: "tomcat:7.0"

18 env:

19 - name: cce_log_stdout

20 value: "true"

21 - name: cce_log_internal

22 value: "/usr/local/tomcat/logs/catalina.*.log"

23 volumeMounts:

24 - name: tomcat-log

25 mountPath: /usr/local/tomcat/logs

26 volumes:

27 - name: tomcat-log

28 emptyDir: {}修改和删除日志规则

日志规则创建之后,用户可以随时对规则进行修改或者删除。点击修改可以重新编辑已经创建的日志规则,编辑页与新建页逻辑基本一致,但是不允许修改集群以及日志类型。

使用 BES (百度云 Elasticsearch) 服务

生产环境使用建议使用 BES 服务,具体参考: Elasticsearch 创建集群

K8S 集群自建 Elasticsearch (仅供参考)

本方法仅供测试,生产环境建议直接使用 BES 服务。使用如下的 yaml 文件,在 CCE 集群中部署 Elasticsearch:

YAML

1apiVersion: v1

2kind: Service

3metadata:

4 name: elasticsearch-logging

5 namespace: kube-system

6 labels:

7 k8s-app: elasticsearch-logging

8 kubernetes.io/cluster-service: "true"

9 addonmanager.kubernetes.io/mode: Reconcile

10 kubernetes.io/name: "Elasticsearch"

11spec:

12 ports:

13 - port: 9200

14 protocol: TCP

15 targetPort: db

16 selector:

17 k8s-app: elasticsearch-logging

18---

19# RBAC authn and authz

20apiVersion: v1

21kind: ServiceAccount

22metadata:

23 name: elasticsearch-logging

24 namespace: kube-system

25 labels:

26 k8s-app: elasticsearch-logging

27 kubernetes.io/cluster-service: "true"

28 addonmanager.kubernetes.io/mode: Reconcile

29---

30kind: ClusterRole

31apiVersion: rbac.authorization.k8s.io/v1

32metadata:

33 name: elasticsearch-logging

34 labels:

35 k8s-app: elasticsearch-logging

36 kubernetes.io/cluster-service: "true"

37 addonmanager.kubernetes.io/mode: Reconcile

38rules:

39- apiGroups:

40 - ""

41 resources:

42 - "services"

43 - "namespaces"

44 - "endpoints"

45 verbs:

46 - "get"

47---

48kind: ClusterRoleBinding

49apiVersion: rbac.authorization.k8s.io/v1

50metadata:

51 namespace: kube-system

52 name: elasticsearch-logging

53 labels:

54 k8s-app: elasticsearch-logging

55 kubernetes.io/cluster-service: "true"

56 addonmanager.kubernetes.io/mode: Reconcile

57subjects:

58- kind: ServiceAccount

59 name: elasticsearch-logging

60 namespace: kube-system

61 apiGroup: ""

62roleRef:

63 kind: ClusterRole

64 name: elasticsearch-logging

65 apiGroup: ""

66---

67# Elasticsearch deployment itself

68apiVersion: apps/v1

69kind: StatefulSet

70metadata:

71 name: elasticsearch-logging

72 namespace: kube-system

73 labels:

74 k8s-app: elasticsearch-logging

75 version: v6.3.0

76 kubernetes.io/cluster-service: "true"

77 addonmanager.kubernetes.io/mode: Reconcile

78spec:

79 serviceName: elasticsearch-logging

80 replicas: 2

81 selector:

82 matchLabels:

83 k8s-app: elasticsearch-logging

84 version: v6.3.0

85 template:

86 metadata:

87 labels:

88 k8s-app: elasticsearch-logging

89 version: v6.3.0

90 kubernetes.io/cluster-service: "true"

91 spec:

92 serviceAccountName: elasticsearch-logging

93 containers:

94 - image: hub.baidubce.com/jpaas-public/elasticsearch:v6.3.0

95 name: elasticsearch-logging

96 resources:

97 # need more cpu upon initialization, therefore burstable class

98 limits:

99 cpu: 1000m

100 requests:

101 cpu: 100m

102 ports:

103 - containerPort: 9200

104 name: db

105 protocol: TCP

106 - containerPort: 9300

107 name: transport

108 protocol: TCP

109 volumeMounts:

110 - name: elasticsearch-logging

111 mountPath: /data

112 env:

113 - name: "NAMESPACE"

114 valueFrom:

115 fieldRef:

116 fieldPath: metadata.namespace

117 volumes:

118 - name: elasticsearch-logging

119 emptyDir: {}

120 # Elasticsearch requires vm.max_map_count to be at least 262144.

121 # If your OS already sets up this number to a higher value, feel free

122 # to remove this init container.

123 initContainers:

124 - image: alpine:3.6

125 command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]

126 name: elasticsearch-logging-init

127 securityContext:

128 privileged: true部署成功后将创建名为 elasticsearch-logging 的 service,如下图所示在创建日志规则时 ES 的地址可填为该 service 的名字,端口为 service 的端口:

使用如下 yaml 部署 kibana,部署成功后通过创建的名为 kibana-logging 的 LoadBalancer 访问 kibana 服务:

YAML

1apiVersion: v1

2kind: Service

3metadata:

4 name: kibana-logging

5 namespace: kube-system

6 labels:

7 k8s-app: kibana-logging

8 kubernetes.io/cluster-service: "true"

9 addonmanager.kubernetes.io/mode: Reconcile

10 kubernetes.io/name: "Kibana"

11spec:

12 ports:

13 - port: 5601

14 protocol: TCP

15 targetPort: ui

16 selector:

17 k8s-app: kibana-logging

18 type: LoadBalancer

19---

20

21apiVersion: apps/v1

22kind: Deployment

23metadata:

24 name: kibana-logging

25 namespace: kube-system

26 labels:

27 k8s-app: kibana-logging

28 kubernetes.io/cluster-service: "true"

29 addonmanager.kubernetes.io/mode: Reconcile

30spec:

31 replicas: 1

32 selector:

33 matchLabels:

34 k8s-app: kibana-logging

35 template:

36 metadata:

37 labels:

38 k8s-app: kibana-logging

39 annotations:

40 seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

41 spec:

42 containers:

43 - name: kibana-logging

44 image: hub.baidubce.com/jpaas-public/kibana:v6.3.0

45 resources:

46 # need more cpu upon initialization, therefore burstable class

47 limits:

48 cpu: 1000m

49 requests:

50 cpu: 100m

51 env:

52 - name: ELASTICSEARCH_URL

53 value: http://elasticsearch-logging:9200

54 - name: SERVER_BASEPATH

55 value: ""

56 ports:

57 - containerPort: 5601

58 name: ui

59 protocol: TCP注意

生产环境下建议使用百度智能云的 Elasticsearch 服务或者自建专用的 Elasticsearch 集群