Using the Container Image Service CCR on the BML Platform

Updated: 2023-07-26


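The platform lets you associate a container image service CCR instance with a user resource pool, where it serves as that pool's image repository. When you submit a job to the user resource pool, you can use the images stored in that repository.

Supported CCR types:

- Container image service CCR, Enterprise Edition

- Container image service CCR, Personal Edition

Jobs that can currently be submitted with a CCR image:

- Custom jobs: training jobs and auto-search jobs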

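Prerequisites

- You have mounted a container engine CCE resource on the platform as a user resource pool (see the container engine CCE documentation).

- You have created a container image service CCR instance (see the container image service CCR documentation).

- The CCR instance is reachable from the CCE resource, that is, from the corresponding VPC.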
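The third prerequisite can be checked from a node of the CCE cluster before the repository is added. The sketch below assumes that the CCR instance exposes the standard Docker Registry HTTP API v2 (an assumption, this page does not state it), where a reachable registry answers `GET /v2/` with 200 or 401. The function names and the host name in the usage comment are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: decide whether a registry endpoint looks reachable.
# Assumption: the registry speaks the Docker Registry HTTP API v2, where
# GET /v2/ answers 200 (open) or 401 (login required) when it is reachable.

# Interpret an HTTP status code returned by GET /v2/.
registry_status_ok() {
  case "$1" in
    200|401) return 0 ;;
    *)       return 1 ;;
  esac
}

# Probe a registry host (placeholder host name supplied by the caller).
# curl reports status 000 when it cannot connect at all.
check_registry() {
  local host="$1" code
  code="$(curl -s -o /dev/null -m 5 -w '%{http_code}' "https://${host}/v2/")" || true
  if registry_status_ok "$code"; then
    echo "reachable (HTTP ${code})"
  else
    echo "not reachable (HTTP ${code})"
    return 1
  fi
}

# Example, to be run from a node inside the VPC:
#   check_registry ccr-example.example.com
```

A 401 counts as success here because it only shows that the network path works; the username and password are supplied later, when the repository is added.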

Creating an Image Repository

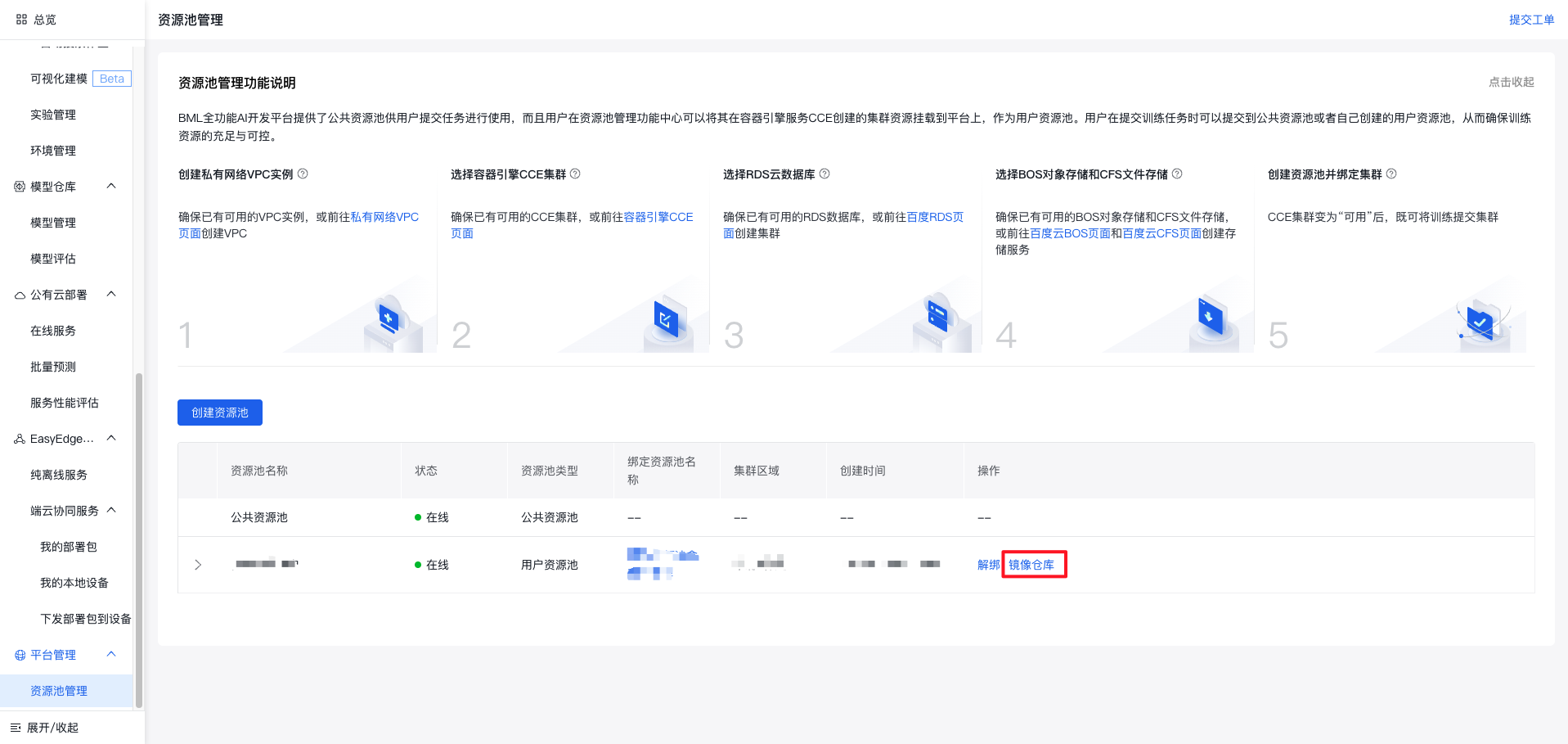

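- Step 1: Go to Platform Management > Resource Pool Management. A user resource pool that is mounted and running normally offers an "Image Repository" action; click it to view the pool's image repositories.

- Step 2: Click Image Repository to open the image repository list.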

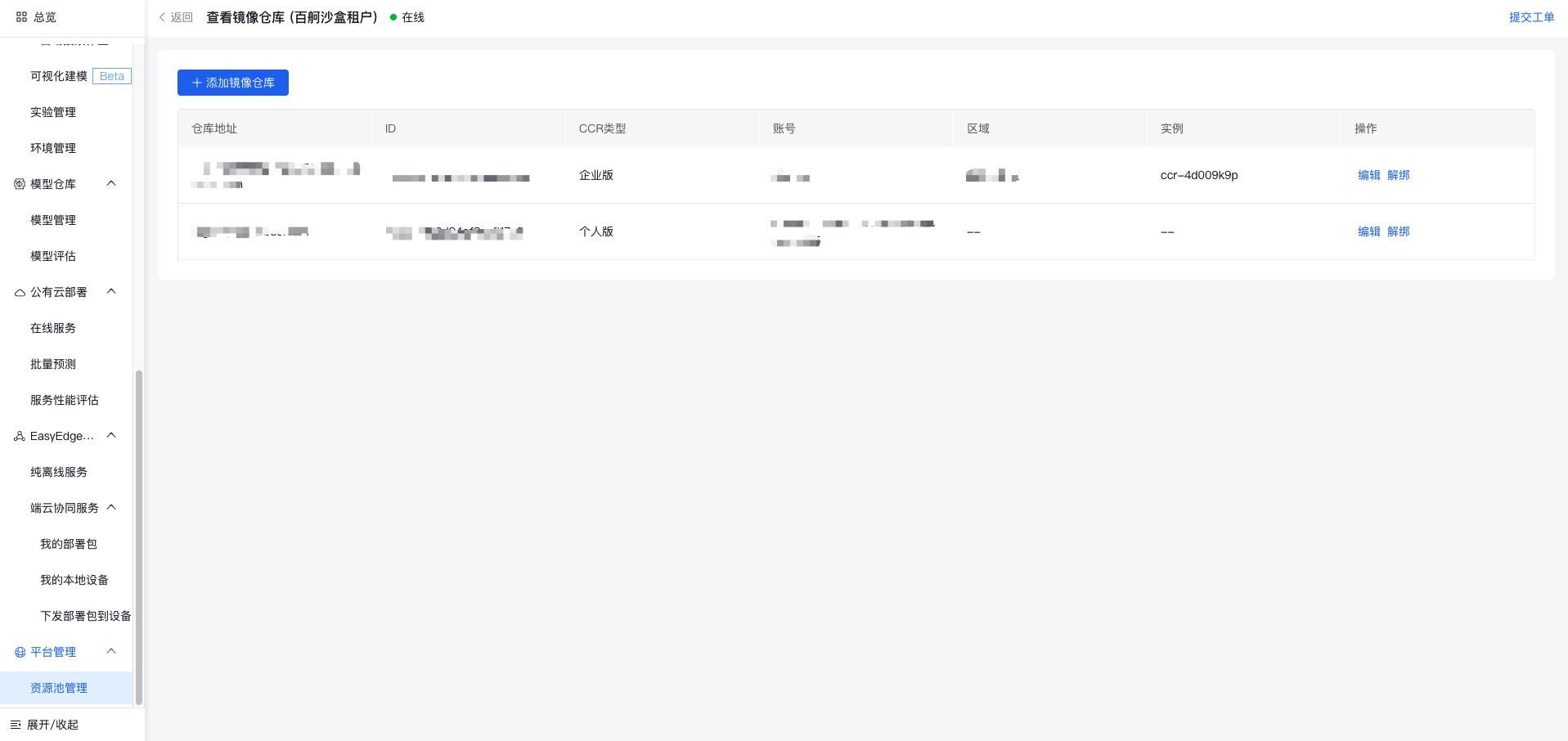

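- Step 3: Click Add Image Repository to start the add workflow.

Enterprise Edition: select a CCR Enterprise Edition instance that belongs to the main account and is in the same region and VPC as the resource pool, then enter its username and password to add it.

Personal Edition: select a CCR Personal Edition instance that belongs to the main account, then enter its username and password to add it.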

Submitting a Custom Job with an Image

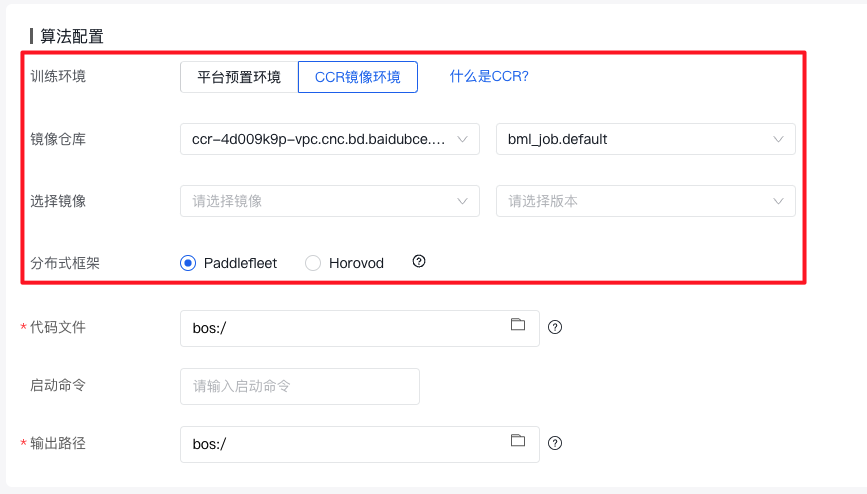

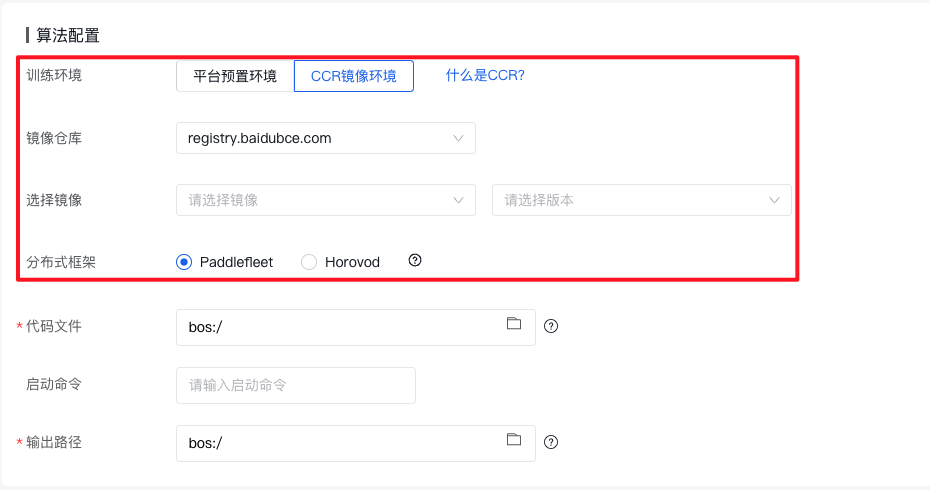

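During algorithm configuration, if you select a user resource pool, you can choose a CCR image environment associated with that pool for the job. (Training jobs and auto-search jobs are submitted the same way.)

- Enterprise Edition: select the image repository, namespace, image, and version in turn, which identifies exactly one image for the job.

- Personal Edition: select the image repository, image, and version in turn, which identifies exactly one image for the job.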
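To illustrate why each selection path identifies exactly one image, the sketch below joins the selected parts into a single reference string using the common `registry/namespace/image:version` layout. That layout, the function name `image_ref`, and the sample values are assumptions for illustration; the address format CCR actually uses may differ.

```shell
#!/usr/bin/env bash
# Join the selected parts into one image reference string.
# Usage: image_ref REGISTRY IMAGE VERSION [NAMESPACE]
# NAMESPACE is given for the Enterprise Edition path and omitted otherwise.
image_ref() {
  local registry="$1" image="$2" version="$3" namespace="${4:-}"
  if [ -n "$namespace" ]; then
    printf '%s/%s/%s:%s\n' "$registry" "$namespace" "$image" "$version"
  else
    printf '%s/%s:%s\n' "$registry" "$image" "$version"
  fi
}

# Enterprise Edition style selection (all values are placeholders):
image_ref registry.example.com trainer 1.0 team-a
# Personal Edition style selection:
image_ref registry.example.com trainer 1.0
```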

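After selecting the image, choose the distributed framework that matches the deep learning framework in the image:

- PaddleFleet: PaddlePaddle's distributed graph engine and large-scale parameter server, which underpins the PaddlePaddle framework's large-scale distributed training.

- Horovod: a distributed training framework that supports well-known open-source machine learning frameworks such as TensorFlow, PyTorch, Ray, and MXNet. It wraps the underlying framework (for example TensorFlow) with distributed scheduling, distributed communication, and gradient computation to train models on large clusters. Horovod mainly targets large-scale synchronous distributed training on GPU clusters.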

Then fill in the code files, start command, output path, and other details to submit the custom job.
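As an illustration of what a start command for a Horovod job can look like, the sketch below builds a `horovodrun` command line from a list of `host:slots` entries. It assumes the job is launched with Horovod's `horovodrun` CLI and that the host names and slot counts are known in advance; how BML supplies them is not covered on this page, and `train.py`, `node1`, and `node2` are placeholders.

```shell
#!/usr/bin/env bash
# Build a horovodrun command line for a list of "host:slots" entries.
# Usage: horovod_cmd SCRIPT HOST:SLOTS [HOST:SLOTS ...]
horovod_cmd() {
  local script="$1"; shift
  local total=0 hosts="" entry
  for entry in "$@"; do
    # The part after the last colon is the number of processes on that host.
    total=$(( total + ${entry##*:} ))
    hosts="${hosts:+${hosts},}${entry}"
  done
  echo "horovodrun -np ${total} -H ${hosts} python ${script}"
}

horovod_cmd train.py node1:4 node2:4
# prints: horovodrun -np 8 -H node1:4,node2:4 python train.py
```

The total passed to `-np` is the sum of the slots, so one process is started per GPU when each slot corresponds to one GPU.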

Appendix: Custom Image Specifications

Paddle image

- Python version: Python 3.7 or later

- Install bos: done in the Dockerfile

    RUN /bin/bash -c 'mkdir -p /home/bos && \
        cd /home/bos && \
        wget --no-check-certificate https://sdk.bce.baidu.com/console-sdk/linux-bcecmd-0.3.0.zip && \
        unzip linux-bcecmd-0.3.0.zip && \
        echo "export LANG=en_US.UTF-8" >> ~/.bashrc && \
        echo "export PATH=/home/bos/linux-bcecmd-0.3.0:${PATH}" >> ~/.bashrc && \
        source ~/.bashrc'

Alternatively, install bcecmd in the image yourself under /home/bos/linux-bcecmd-0.3.0. Either way, make sure that running "/home/bos/linux-bcecmd-0.3.0/bcecmd" on the command line prints the bcecmd help text, that is, the system recognizes the command.

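- Install the SDK required by auto-search

- Install the wheel package yourself: rudder_autosearch-1.0.0-py3-none-any.whl (available from the download link on the documentation page).

- Install jq, needed to parse STS: apt-get install jq

- Install curl, needed to parse STS: apt-get install curl

- Install protobuf, needed by the search SDK; version 3.20.1 is recommended: pip install protobuf==3.20.1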
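The requirements listed so far can be checked inside a built image with a short script. This is a minimal sketch, not an official validation tool: the function names are made up for illustration, `version_ge` relies on GNU `sort -V`, and the check only confirms that protobuf is importable, not that it is version 3.20.1.

```shell
#!/usr/bin/env bash
# version_ge A B: succeed when version A >= version B (needs GNU sort -V).
version_ge() {
  [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# missing_tools NAME...: print each command that is not on PATH.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# check_image: run inside the container; prints findings, returns 1 on any failure.
check_image() {
  local status=0 pyver missing bcecmd="/home/bos/linux-bcecmd-0.3.0/bcecmd"
  pyver="$(python3 -c 'import platform; print(platform.python_version())' 2>/dev/null || true)"
  if [ -z "$pyver" ] || ! version_ge "$pyver" 3.7; then
    echo "python3 >= 3.7 required, found: ${pyver:-none}"; status=1
  fi
  missing="$(missing_tools jq curl | tr '\n' ' ')"
  if [ -n "$missing" ]; then
    echo "missing tools: ${missing}"; status=1
  fi
  if [ ! -x "$bcecmd" ]; then
    echo "bcecmd not executable at ${bcecmd}"; status=1
  fi
  if ! python3 -c 'import google.protobuf' >/dev/null 2>&1; then
    echo "protobuf is not importable"; status=1
  fi
  return "$status"
}

# Inside the container: check_image && echo "image looks usable"
```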

- Set the initial value of the virtualization environment variable: done in the Dockerfile

    ENV NVIDIA_VISIBLE_DEVICES=""

Sklearn image

    FROM ubuntu16.04-python3

    # Configure time zone
    RUN apt-get update && \
        apt-get install -y tzdata && \
        ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        dpkg-reconfigure -f noninteractive tzdata && \
        apt-get clean

    RUN apt-get install -y --no-install-recommends \
        build-essential \
        libopencv-dev \
        libssl-dev \
        dnsutils \
        unzip \
        vim \
        jq \
        curl \
        wget && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/*

    # Python 3 (Miniconda)
    RUN bash -c 'cd /tmp && \
        wget --no-check-certificate https://repo.anaconda.com/miniconda/Miniconda3-py37_4.8.3-Linux-x86_64.sh && \
        bash Miniconda3-py37_4.8.3-Linux-x86_64.sh -b -p ~/miniconda3 && \
        echo "source ~/miniconda3/bin/activate" >> ~/.bashrc && \
        echo "export PATH=~/miniconda3/bin:${PATH}" >> ~/.bashrc && \
        source ~/.bashrc && \
        rm -rf Miniconda3-py37_4.8.3-Linux-x86_64.sh' && \
        /root/miniconda3/bin/python -m pip config set global.index-url https://pypi.douban.com/simple/ && \
        /root/miniconda3/bin/python -m pip install --upgrade pip && \
        /root/miniconda3/bin/python -m pip install --upgrade setuptools && \
        /root/miniconda3/bin/python -m pip install numpy==1.17.4 && \
        /root/miniconda3/bin/python -m pip install albumentations==0.4.3 && \
        /root/miniconda3/bin/python -m pip install Cython==0.29.16 && \
        /root/miniconda3/bin/python -m pip install pycocotools==2.0.0 && \
        /root/miniconda3/bin/python -m pip install ruamel.yaml && \
        /root/miniconda3/bin/python -m pip install ujson && \
        /root/miniconda3/bin/python -m pip install scipy==1.5.3 && \
        /root/miniconda3/bin/python -m pip install scikit-learn==0.23.2 && \
        /root/miniconda3/bin/python -m pip install pandas

    # Remove cached conda packages and tarballs; -y skips the confirmation prompt
    RUN /root/miniconda3/condabin/conda clean -p -y && \
        /root/miniconda3/condabin/conda clean -t -y
    RUN rm -rf ~/.cache/pip
    RUN rm -rf /usr/bin/python3 && ln -s /root/miniconda3/bin/python /usr/bin/python3

    # sklearn
    RUN /root/miniconda3/bin/python -m pip install xgboost==1.3.1

    # Install bos
    RUN /bin/bash -c 'mkdir -p /home/bos && \
        cd /home/bos && \
        wget --no-check-certificate https://sdk.bce.baidu.com/console-sdk/linux-bcecmd-0.3.0.zip && \
        unzip linux-bcecmd-0.3.0.zip && \
        echo "export LANG=en_US.UTF-8" >> ~/.bashrc && \
        echo "export PATH=/home/bos/linux-bcecmd-0.3.0:${PATH}" >> ~/.bashrc && \
        source ~/.bashrc'

    # Add the search job SDK and install protobuf 3.20.1 for it.
    # The Miniconda interpreter is called explicitly because RUN does not read ~/.bashrc.
    COPY rudder-autosearch-1.0.0-py3-none-any.whl /home/rudder-autosearch-1.0.0-py3-none-any.whl
    RUN /root/miniconda3/bin/python -m pip install /home/rudder-autosearch-1.0.0-py3-none-any.whl && \
        /root/miniconda3/bin/python -m pip install protobuf==3.20.1

    ENV NVIDIA_VISIBLE_DEVICES=""

    ENTRYPOINT ["/bin/bash"]

For multi-node distributed PyTorch/TensorFlow jobs, the following additional dependencies must be installed:

- Install Open MPI

    # Install Open MPI
    RUN mkdir /tmp/openmpi && \
        cd /tmp/openmpi && \
        wget https://download.open-mpi.org/release/open-mpi/v4.0/openmpi-4.0.0.tar.gz && \
        tar zxf openmpi-4.0.0.tar.gz && \
        cd openmpi-4.0.0 && \
        ./configure --enable-orterun-prefix-by-default && \
        make -j $(nproc) all && \
        make install && \
        ldconfig && \
        rm -rf /tmp/openmpi

    # Create a wrapper for OpenMPI to allow running as root by default
    RUN mv /usr/local/bin/mpirun /usr/local/bin/mpirun.real && \
        echo '#!/bin/bash' > /usr/local/bin/mpirun && \
        echo 'mpirun.real --allow-run-as-root "$@"' >> /usr/local/bin/mpirun && \
        chmod a+x /usr/local/bin/mpirun

    # Configure OpenMPI to run good defaults:
    #   --bind-to none --map-by slot --mca btl_tcp_if_exclude lo,docker0
    RUN echo "hwloc_base_binding_policy = none" >> /usr/local/etc/openmpi-mca-params.conf && \
        echo "rmaps_base_mapping_policy = slot" >> /usr/local/etc/openmpi-mca-params.conf && \
        echo "btl_tcp_if_exclude = lo,docker0" >> /usr/local/etc/openmpi-mca-params.conf

- Install Horovod (MPI-style jobs require Horovod and its related configuration. Building Horovod needs CMake 3.13 or later, which can be installed at a specific version with apt-get install.)

    RUN ldconfig /usr/local/cuda/targets/x86_64-linux/lib/stubs && \
        HOROVOD_GPU_ALLREDUCE=NCCL HOROVOD_WITH_PYTORCH=1 pip install --no-cache-dir horovod && \
        ldconfig

- Install and configure NCCL

    # Set default NCCL parameters
    RUN echo NCCL_DEBUG=INFO >> /etc/nccl.conf

- Install and configure OpenSSH

    # Install OpenSSH for MPI to communicate between containers
    RUN apt-get install -y --no-install-recommends openssh-client openssh-server && \
        mkdir -p /var/run/sshd

    # Allow OpenSSH to talk to containers without asking for confirmation
    RUN grep -v StrictHostKeyChecking /etc/ssh/ssh_config > /etc/ssh/ssh_config.new && \
        echo "    StrictHostKeyChecking no" >> /etc/ssh/ssh_config.new && \
        mv /etc/ssh/ssh_config.new /etc/ssh/ssh_config

PyTorch/TF image example

    FROM cuda11.0.3-cudnn8-devel-ubuntu18.04

    # Remove the CUDA and NVIDIA ML apt sources shipped with the base image
    RUN rm /etc/apt/sources.list.d/cuda.list && rm /etc/apt/sources.list.d/nvidia-ml.list

    # Configure time zone
    RUN apt-get update && \
        DEBIAN_FRONTEND="noninteractive" TZ="Asia/Shanghai" \
        apt-get install -y tzdata && \
        ln -snf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
        dpkg-reconfigure -f noninteractive tzdata && \
        apt-get clean

    # Horovod needs CMake 3.13 or later; check that the installed version meets this
    RUN apt-get install -y \
        build-essential \
        libopencv-dev \
        libssl-dev \
        dnsutils \
        unzip \
        vim \
        git \
        jq \
        curl \
        cmake \
        wget \
        ca-certificates \
        libjpeg-dev \
        libpng-dev && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/*

    # Python 3 (Miniconda)
    RUN bash -c 'cd /tmp && \
        wget --no-check-certificate https://repo.anaconda.com/miniconda/Miniconda3-py37_4.8.3-Linux-x86_64.sh && \
        bash Miniconda3-py37_4.8.3-Linux-x86_64.sh -b -p ~/miniconda3 && \
        echo "source ~/miniconda3/bin/activate" >> ~/.bashrc && \
        echo "export PATH=~/miniconda3/bin:${PATH}" >> ~/.bashrc && \
        source ~/.bashrc && \
        rm -rf Miniconda3-py37_4.8.3-Linux-x86_64.sh' && \
        /root/miniconda3/bin/python -m pip config set global.index-url https://pypi.douban.com/simple/ && \
        /root/miniconda3/bin/python -m pip install --upgrade pip && \
        /root/miniconda3/bin/python -m pip install --upgrade setuptools && \
        /root/miniconda3/bin/python -m pip install numpy==1.17.4 && \
        /root/miniconda3/bin/python -m pip install albumentations==0.4.3 && \
        /root/miniconda3/bin/python -m pip install Cython==0.29.16 && \
        /root/miniconda3/bin/python -m pip install pycocotools==2.0.0 && \
        /root/miniconda3/bin/python -m pip install ruamel.yaml && \
        /root/miniconda3/bin/python -m pip install ujson && \
        /root/miniconda3/bin/python -m pip install scikit-learn==0.23.2 && \
        /root/miniconda3/bin/python -m pip install pandas

    # Remove cached conda packages and tarballs; -y skips the confirmation prompt
    RUN /root/miniconda3/condabin/conda clean -p -y && \
        /root/miniconda3/condabin/conda clean -t -y
    RUN rm -rf ~/.cache/pip
    RUN rm -rf /usr/bin/python3 && ln -s /root/miniconda3/bin/python /usr/bin/python3

    # torch
    RUN /root/miniconda3/bin/python -m pip install torch==1.7.1+cu110 torchvision==0.8.2+cu110 torchaudio===0.7.2 \
        -f https://download.pytorch.org/whl/torch_stable.html

    # Install Open MPI 4.0.0
    RUN mkdir /tmp/openmpi && \
        cd /tmp/openmpi && \
        wget https://download.open-mpi.org/release/open-mpi/v4.0/openmpi-4.0.0.tar.gz && \
        tar zxf openmpi-4.0.0.tar.gz && \
        cd openmpi-4.0.0 && \
        ./configure --enable-orterun-prefix-by-default && \
        make -j $(nproc) all && \
        make install && \
        ldconfig && \
        rm -rf /tmp/openmpi

    # Install Horovod, temporarily using CUDA stubs.
    # /usr/local/cuda links to the CUDA version installed in the base image.
    # The Miniconda interpreter is called explicitly because RUN does not read ~/.bashrc.
    RUN ldconfig /usr/local/cuda/targets/x86_64-linux/lib/stubs && \
        HOROVOD_GPU_ALLREDUCE=NCCL HOROVOD_WITH_PYTORCH=1 \
        /root/miniconda3/bin/python -m pip install --no-cache-dir horovod && \
        ldconfig

    # Create a wrapper for OpenMPI to allow running as root by default
    RUN mv /usr/local/bin/mpirun /usr/local/bin/mpirun.real && \
        echo '#!/bin/bash' > /usr/local/bin/mpirun && \
        echo 'mpirun.real --allow-run-as-root "$@"' >> /usr/local/bin/mpirun && \
        chmod a+x /usr/local/bin/mpirun

    # Configure OpenMPI to run good defaults:
    #   --bind-to none --map-by slot --mca btl_tcp_if_exclude lo,docker0
    RUN echo "hwloc_base_binding_policy = none" >> /usr/local/etc/openmpi-mca-params.conf && \
        echo "rmaps_base_mapping_policy = slot" >> /usr/local/etc/openmpi-mca-params.conf && \
        echo "btl_tcp_if_exclude = lo,docker0" >> /usr/local/etc/openmpi-mca-params.conf

    # Set default NCCL parameters
    RUN echo NCCL_DEBUG=INFO >> /etc/nccl.conf

    # Install OpenSSH for MPI to communicate between containers.
    # The apt lists were removed above, so refresh them first.
    RUN apt-get update && \
        apt-get install -y --no-install-recommends openssh-client openssh-server && \
        mkdir -p /var/run/sshd && \
        rm -rf /var/lib/apt/lists/*

    # Allow OpenSSH to talk to containers without asking for confirmation
    RUN grep -v StrictHostKeyChecking /etc/ssh/ssh_config > /etc/ssh/ssh_config.new && \
        echo "    StrictHostKeyChecking no" >> /etc/ssh/ssh_config.new && \
        mv /etc/ssh/ssh_config.new /etc/ssh/ssh_config

    # Install bos
    RUN /bin/bash -c 'mkdir -p /home/bos && \
        cd /home/bos && \
        wget --no-check-certificate https://sdk.bce.baidu.com/console-sdk/linux-bcecmd-0.3.0.zip && \
        unzip linux-bcecmd-0.3.0.zip && \
        echo "export LANG=en_US.UTF-8" >> ~/.bashrc && \
        echo "export PATH=/home/bos/linux-bcecmd-0.3.0:${PATH}" >> ~/.bashrc && \
        source ~/.bashrc'

    # Add the search job SDK and install protobuf 3.20.1 for it
    COPY rudder-autosearch-1.0.0-py3-none-any.whl /home/rudder-autosearch-1.0.0-py3-none-any.whl
    RUN /root/miniconda3/bin/python -m pip install /home/rudder-autosearch-1.0.0-py3-none-any.whl && \
        /root/miniconda3/bin/python -m pip install protobuf==3.20.1

    ENV NVIDIA_VISIBLE_DEVICES=""

    ENTRYPOINT ["/bin/bash"]
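The OpenSSH step above rewrites ssh_config by filtering out any existing StrictHostKeyChecking line and appending a new one. The sketch below expresses the same filter-then-append pattern as a reusable function that can be tried on a scratch file before it goes into an image; the function name `set_ssh_option` is made up for illustration.

```shell
#!/usr/bin/env bash
# set_ssh_option FILE KEY VALUE
# Drop every line of FILE that mentions KEY, then append "KEY VALUE".
# Running it twice leaves exactly one KEY line, so the step is repeatable.
set_ssh_option() {
  local file="$1" key="$2" value="$3" tmp
  tmp="$(mktemp)"
  # grep exits 1 when every line is filtered out, which is not an error here.
  grep -v -- "$key" "$file" > "$tmp" || true
  echo "    ${key} ${value}" >> "$tmp"
  # Writing back with cat keeps the original file's owner and permissions.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Inside the image this would be:
#   set_ssh_option /etc/ssh/ssh_config StrictHostKeyChecking no
```

Disabling host key checking is what lets MPI open SSH connections between freshly started containers without an interactive prompt, so it only makes sense on a trusted cluster network.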

102

103#安装bos

104RUN /bin/bash -c 'mkdir /home/bos && \

105 cd /home/bos && \

106 wget --no-check-certificate https://sdk.bce.baidu.com/console-sdk/linux-bcecmd-0.3.0.zip && \

107 unzip linux-bcecmd-0.3.0.zip && \

108 echo "export LANG=en_US.UTF-8" >> ~/.bashrc && \

109 echo "export PATH="/home/bos/linux-bcecmd-0.3.0:${PATH}"" >> ~/.bashrc && \

110 source ~/.bashrc'

111

112#添加搜索作业SDK install 3.20.1 protobuf for searchjob

113COPY rudder-autosearch-1.0.0-py3-none-any.whl /home/rudder-autosearch-1.0.0-py3-none-any.whl

114RUN pip install /home/rudder-autosearch-1.0.0-py3-none-any.whl && \

115 pip install protobuf==3.20.1

116

117ENV NVIDIA_VISIBLE_DEVICES ""

118

119ENTRYPOINT ["/bin/bash"]