基于GPU云服务器部署NIM

更新时间:2025-10-27

概览

NVIDIA NIM 是NVIDIA AI Enterprise的一部分,为用户提供了基于GPU加速的推理微服务容器。容器中含有预训练过的、定制化的AI模型,通过简单的命令即可完成云服务器部署。NIM 微服务对外开放了工业级标准的API,可与AI应用、开发框架和一些工作流程进行集成。容器内部的模型构建基于NVIDIA和社区的预优化的推理引擎,如NVIDIA TensorRT和TensorRT-LLM产生的推理引擎等。同时,NIM微服务针对每个基础模型的组合和运行时检测到的GPU系统,自动优化响应延迟和吞吐。容器还提供了标准的可观测数据反馈,并内置了对Kubernetes在GPU上的自动缩放的支持。

使用步骤

前提条件

1.用户需要提前注册NGC账号,同时需要生成并保存NGC的API key,具体可以参考基于GPU实例部署NGC环境。

2.已创建配置Ampere及以上架构GPU,例如A10、A800的云服务器实例。

基于GPU实例部署NIM容器

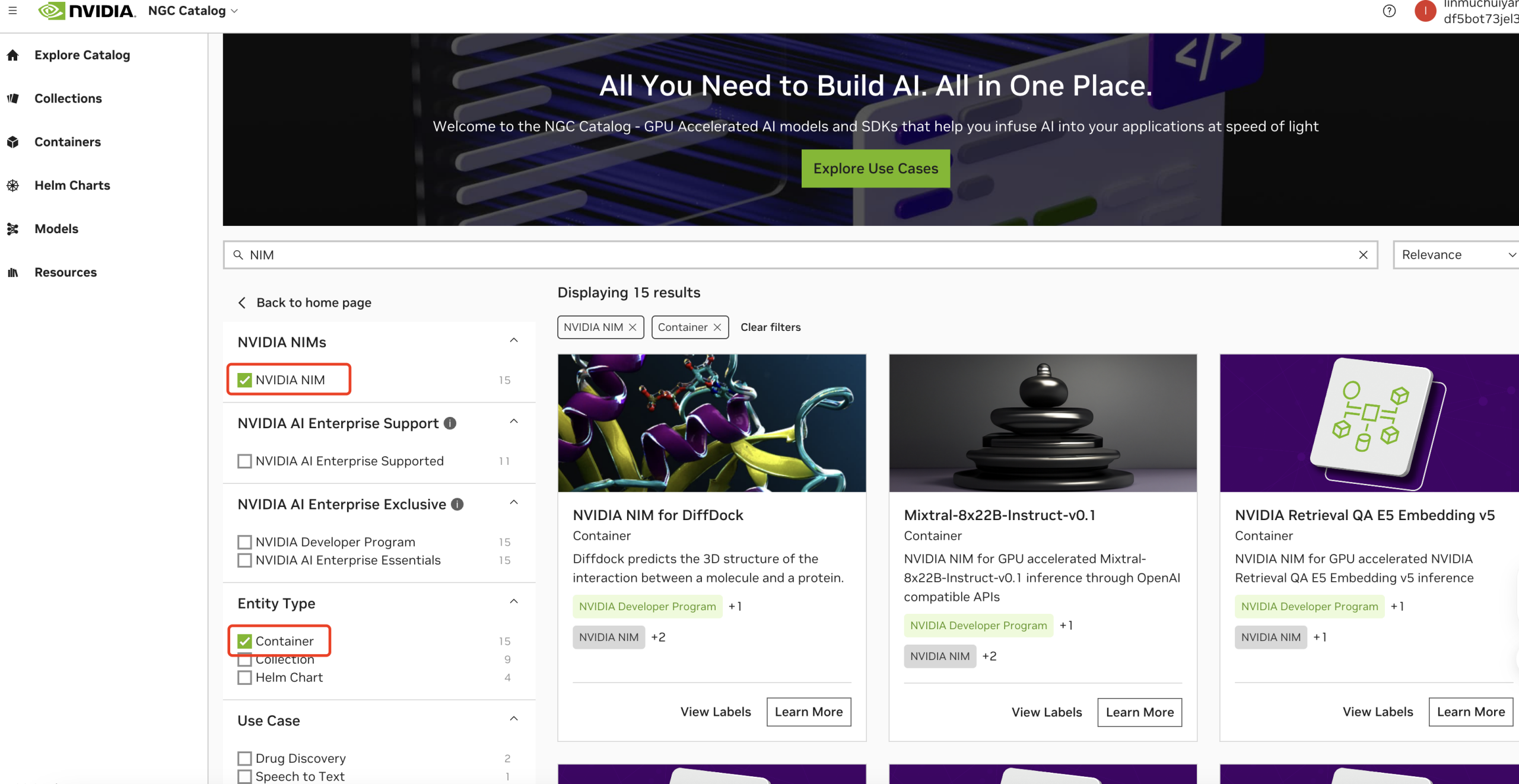

在NGC搜索界面中搜索NIM并勾选NVIDIA NIM与Container,可以看到目前NGC上提供的NIM 容器镜像。

此实践以Meta/Llama3-8b-instruct的1.0版本容器镜像为例。

此实践以Meta/Llama3-8b-instruct的1.0版本容器镜像为例。

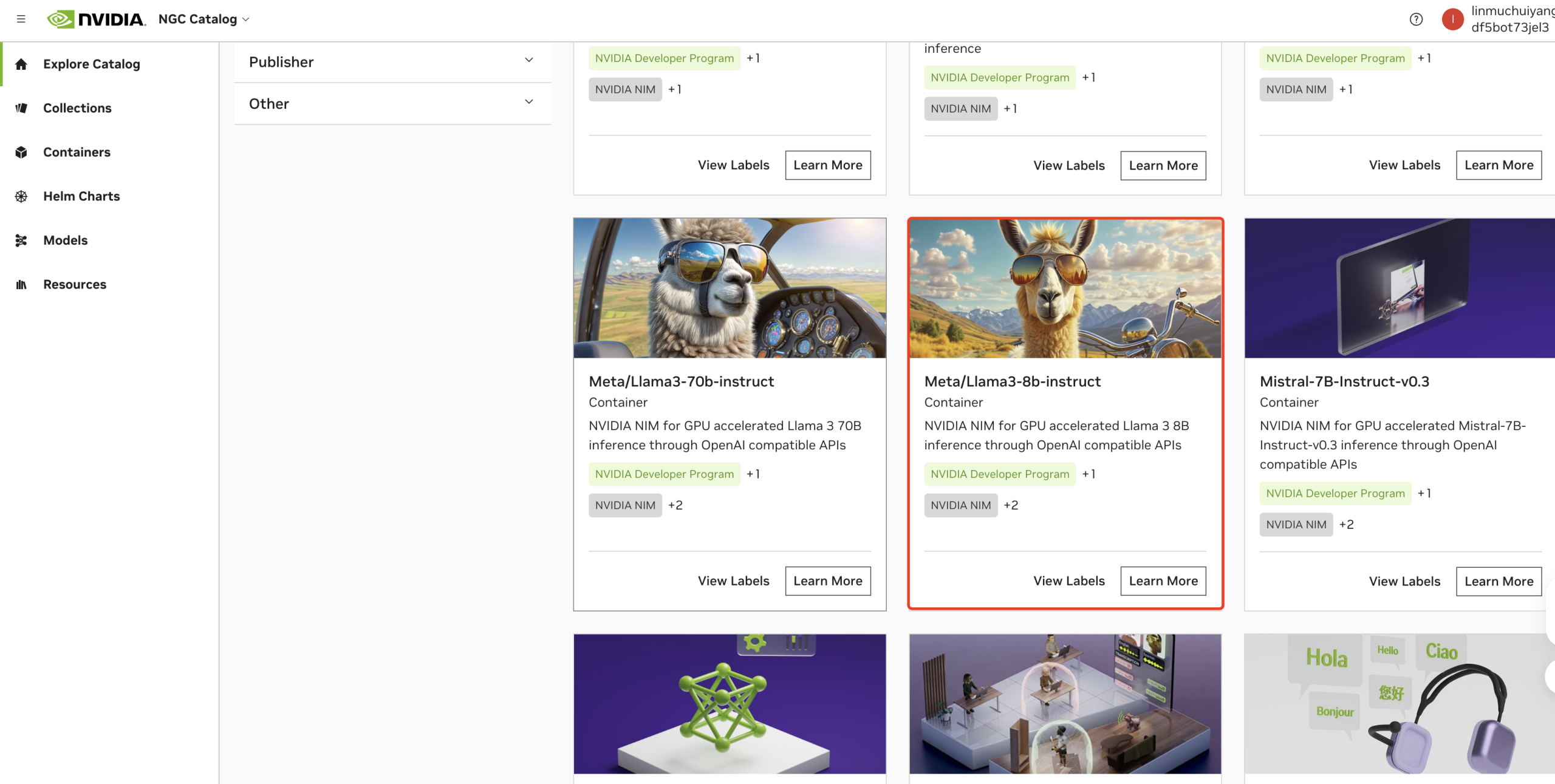

进入容器镜像页面并复制容器镜像地址(下图中红色框选处的位置)。

图2:Meta/Llama3-8b-instruct 在NGC上提供的容器镜像及其版本

图2:Meta/Llama3-8b-instruct 在NGC上提供的容器镜像及其版本

登陆GPU云服务器并参考基于GPU实例部署NGC环境拉取nvcr.io/nim/meta/llama3-8b-instruct:1.0.0的容器镜像。

通过如下命令启动容器镜像服务

Plain Text

1$export NGC_API_KEY=您的NGC API Key

2$nvidia-docker run -it --rm --name=LLaMA3-70B --shm-size=16GB -e NGC_API_KEY -v${PWD}/.cache/nim:/opt/nim/.cache -u 0 -p 8000:8000 nvcr.io/nim/meta/llama3-70b-instruct:1.0.0容器启动成功之后,预计输出如下:

Plain Text

1===========================================

2== NVIDIA Inference Microservice LLM NIM == ===========================================

3NVIDIA Inference Microservice LLM NIM Version 1.0.0

4Model: nim/meta/llama3-8b-instruct

5Container image Copyright (c) 2016-2024, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

6This NIM container is governed by the NVIDIA AI Product Agreement here: https://www.nvidia.com/en-us/data-center/products/nvidia-ai-enterprise/eula/.

7A copy of this license can be found under /opt/nim/LICENSE.

8The use of this model is governed by the AI Foundation Models Community License here: https://docs.nvidia.com/ai-foundation-models-community-license.pdf.

9ADDITIONAL INFORMATION: Meta Llama 3 Community License, Built with Meta Llama 3.

10A copy of the Llama 3 license can be found under /opt/nim/MODEL_LICENSE.

112024-06-04 09:27:50,266 [INFO] PyTorch version 2.2.2 available.

122024-06-04 09:27:50,814 [WARNING] [TRT-LLM] [W] Logger level already set from environment. Discard new verbosity: error

132024-06-04 09:27:50,814 [INFO] [TRT-LLM] [I] Starting TensorRT-LLM init.

142024-06-04 09:27:51,031 [INFO] [TRT-LLM] [I] TensorRT-LLM inited. [TensorRT-LLM] TensorRT-LLM version: 0.10.1.dev2024053000

15INFO 06-04 09:27:51.718 api_server.py:489] NIM LLM API version 1.0.0 INFO 06-04 09:27:51.719 ngc_profile.py:217] Running NIM without LoRA. Only looking for compatible profiles that do not support LoRA.

16INFO 06-04 09:27:51.719 ngc_profile.py:219] Detected 3 compatible profile(s).

17…

18INFO 06-04 09:28:07.393 api_server.py:456] Serving endpoints: 0.0.0.0:8000/openapi.json

190.0.0.0:8000/docs

200.0.0.0:8000/docs/oauth2-redirect

210.0.0.0:8000/metrics

220.0.0.0:8000/v1/health/ready

230.0.0.0:8000/v1/health/live

240.0.0.0:8000/v1/models

250.0.0.0:8000/v1/version

260.0.0.0:8000/v1/chat/completions

270.0.0.0:8000/v1/completions

28INFO 06-04 09:28:07.393 api_server.py:460] An example cURL request: curl -X 'POST' \

29'http://0.0.0.0:8000/v1/chat/completions' \

30-H 'accept: application/json' \

31-H 'Content-Type: application/json' \

32-d '{

33"model": "meta/llama3-8b-instruct",

34"messages": [

35{

36"role":"user",

37"content":"Hello! How are you?"

38},

39{

40"role":"assistant",

41"content":"Hi! I am quite well, how can I help you today?"

42},

43{

44"role":"user",

45"content":"Can you write me a song?"

46}

47],

48"top_p": 1,

49"n": 1,

50"max_tokens": 15,

51"stream": true,

52"frequency_penalty": 1.0,

53"stop": ["hello"]

54}'

55INFO 06-04 09:28:07.433 server.py:82] Started server process [32]

56INFO 06-04 09:28:07.433 on.py:48] Waiting for application startup.

57INFO 06-04 09:28:07.438 on.py:62] Application startup complete.

58INFO 06-04 09:28:07.438 server.py:214] Uvicorn running on http://0.0.0.0:8000 (Press CTRL+C to quit)访问服务

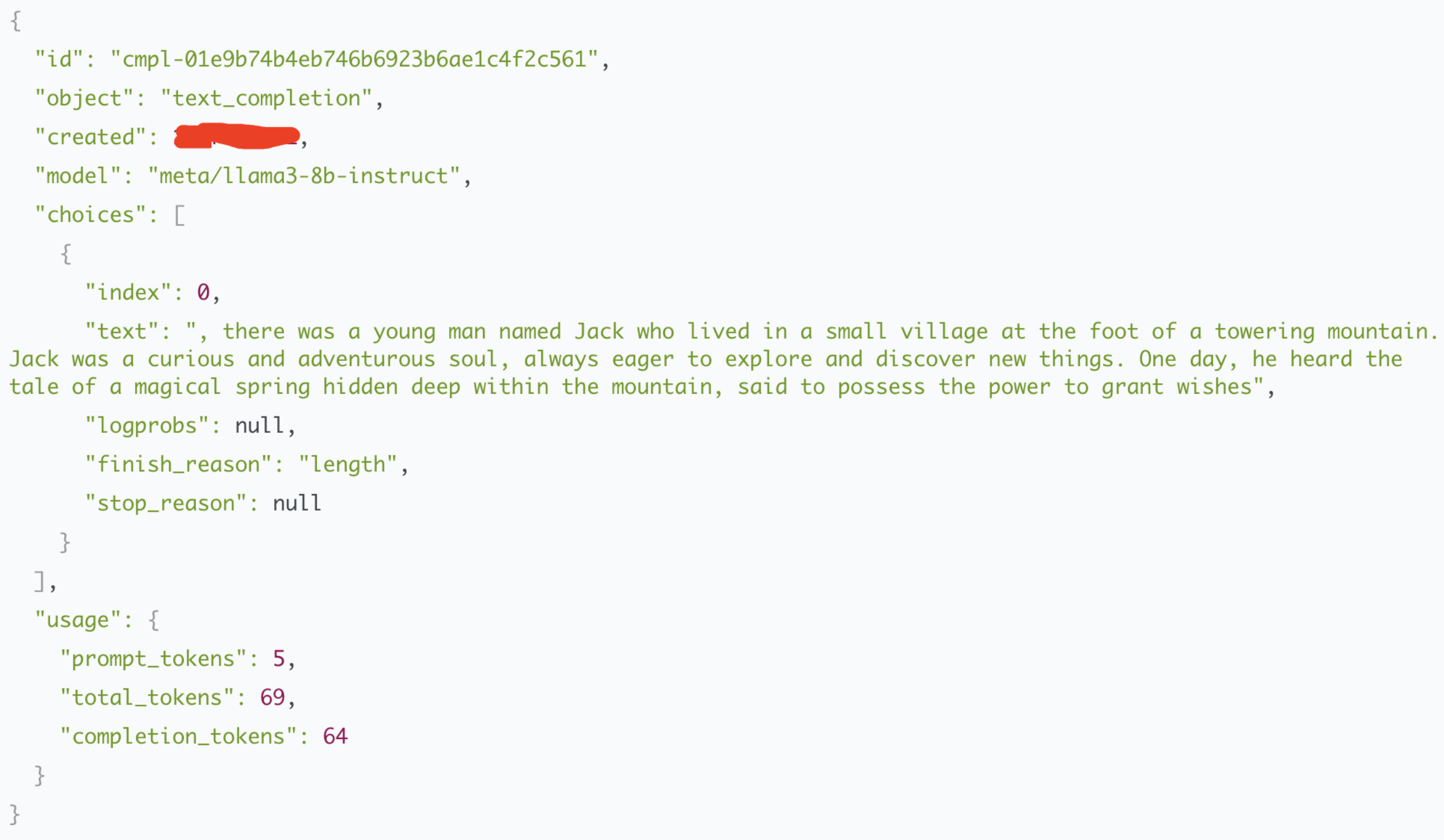

使用curl命令验证推理服务,这里举了一个文本续写的例子。

Plain Text

1$curl -s http://0.0.0.0:8000/v1/completions -H 'accept: application/json' -H 'Content-Type: application/json' -d '{"model":"meta/llama3-8b-instruct", "prompt": "Once upon a time", "max_tokens":64}' | jq 预期输出结果如下: